SPACE NAVIGATION

We are continuing the series of posts describing the Zet Universe Interface Language. In this post we will cover the basics of navigation and of moving things inside the Zet Universe space.

Space: The final frontier. These are the voyages of the Starship Enterprise. Its five-year mission: To explore strange new worlds, to seek out new life and new civilizations, to boldly go where no man has gone before.

Star Trek: The Original Series

Today we will cover the basics of navigation in Zet Universe space.

As noted in the previous post, it is a two-dimensional, zoomable, infinite space that plays the fundamental role in user interface interactions. By analogy with the real-world Universe, this space contains everything. In the Zet Universe language we use “thing” to describe any living concept from the real world; a thing is located in the space.

Zet Universe is being designed to work with different input methods: mouse, pen and multitouch to begin with. This raises a dilemma in designing interactions for multiple input methods: we can either optimize interactions for each method or use the same interaction gestures across all of them. Both approaches have their advantages and disadvantages; to understand them better, we need to clearly distinguish the input methods from one another. Hal Berenson, an ex-Microsoftie who until recently was a Distinguished Engineer at the company, wrote an excellent article on this topic, stating that three main attributes define how applicable an input method is to a given task:

These three attributes, density (how much information can be conveyed in a small space), precision (how unambiguous is the information conveyed), and how natural (to the way humans think and work) can be used to evaluate any style of computer interaction. The ideal would be for interactions to be very dense, very precise, and very natural. The reality is that these three attributes work against one another and so all interaction styles are a compromise.

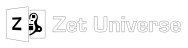

How navigation works in a two-dimensional zoomable infinite space depends heavily on the distance the user has to cover between the starting point and the destination. Zet Universe gives the user a simple dragging metaphor that is the same across all three currently supported input methods. Although this is a simple and effective way to navigate within one, two, maybe three screens of the current position, a long-distance “jump” becomes cumbersome, because the user has to drag through many screens to reach the final point. This problem is solved by the so-called “Big Picture” view, a higher-level map where only the names of thing clusters are shown:

Infinite space at normal scale; all things are visible; we call this normal mode an “Infinite Space”

Infinite space at semantic zoom scale; only group headings are shown; we call this mode a “Big Picture”

Thus, the user navigates with mouse, pen or touch by clicking (pressing the stylus down, tapping) on a part of the space that is free of things, moving, and releasing the mouse button (or lifting the stylus, or finishing the touch gesture). This works in exactly the same way in both modes, “Infinite Space” (the normal one) and “Big Picture”. The zooming gesture (mouse wheel for mouse, pinch-to-zoom for touch) provides a seamless change of view from the big picture to the details and back. It is important to note that we do not provide any simple way to zoom in or out with the pen.
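The pan and zoom behaviour described above can be sketched in a few lines. This is only an illustration under our own assumptions, not the actual Zet Universe code: the `Viewport` class, its field names and the `BIG_PICTURE_SCALE` threshold are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical threshold: below this scale the view is treated as "Big Picture".
BIG_PICTURE_SCALE = 0.25


@dataclass
class Viewport:
    """Maps screen pixels to coordinates of the infinite space."""
    origin_x: float = 0.0  # space coordinate shown at the screen's top-left corner
    origin_y: float = 0.0
    scale: float = 1.0     # screen pixels per space unit

    def screen_to_space(self, sx, sy):
        return (self.origin_x + sx / self.scale,
                self.origin_y + sy / self.scale)

    def pan(self, dx_pixels, dy_pixels):
        """Drag the space by a screen delta so the content follows the pointer."""
        self.origin_x -= dx_pixels / self.scale
        self.origin_y -= dy_pixels / self.scale

    def zoom(self, factor, sx, sy):
        """Zoom by `factor`, keeping the space point under (sx, sy) in place."""
        fx, fy = self.screen_to_space(sx, sy)
        self.scale *= factor
        self.origin_x = fx - sx / self.scale
        self.origin_y = fy - sy / self.scale

    def mode(self):
        """Name of the current view mode, chosen by the assumed scale threshold."""
        return "Big Picture" if self.scale < BIG_PICTURE_SCALE else "Infinite Space"
```

Because `pan` divides the pixel delta by the current scale, the same dragging gesture covers far more of the space when the view is zoomed out, which is what makes long "jumps" cheap in the Big Picture mode.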

SELECTING THINGS

ONE THING

In the old world of desktop environments, the typical way to choose a thing on the desktop was to point and click. In the modern NUI world it is simply a tap. We support both approaches to make the interface natural in both interaction modes:

MANY THINGS

But how can you choose several things at once? In the old world you’d just click in a free area and drag out a rectangular selection with your mouse. What about the NUI world? Your fingers are good enough to move things around, but drawing a free-form selection with a thick, finger-drawn line is a hard job. This is a task that requires precision. Thankfully, Windows slates come with a pen (or stylus), and that is how we provide users with this functionality.

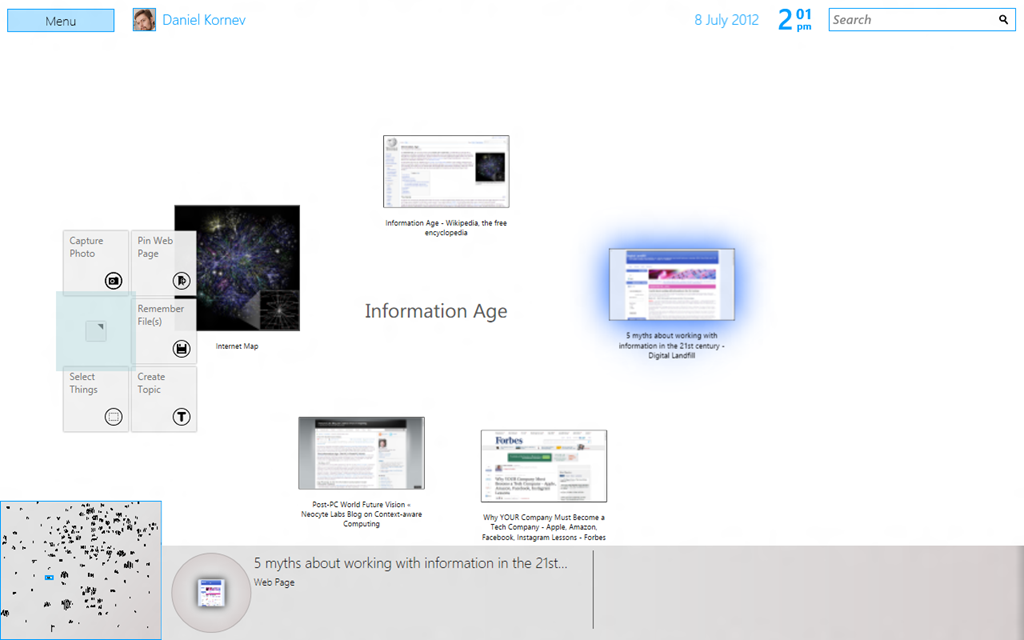

To choose more than one thing, use the “Selection Things” button in the Actions Menu:

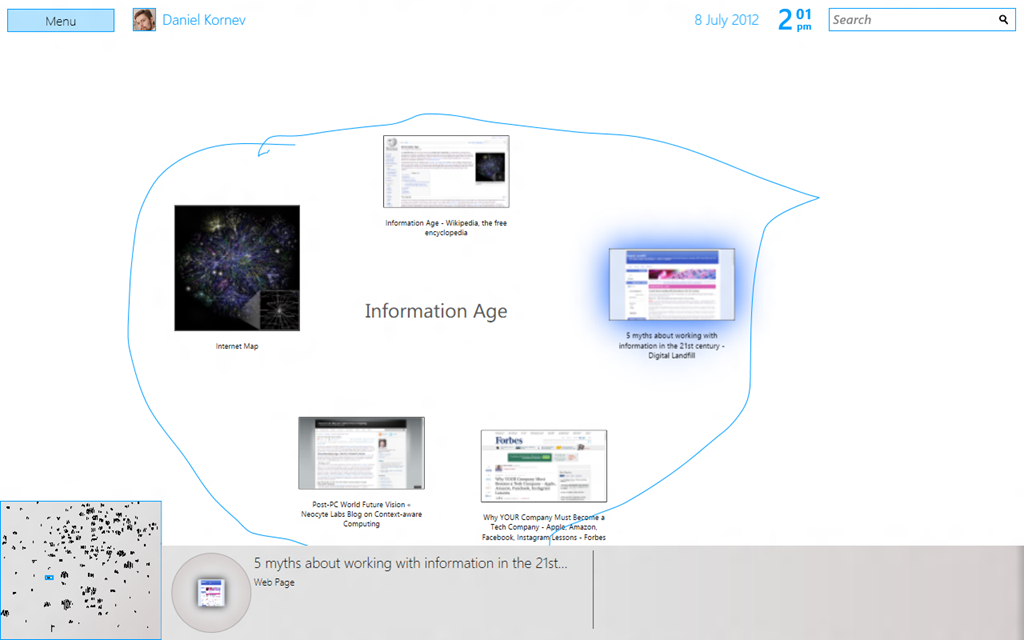

Once clicked, it becomes green and you are now in the “lasso selection mode”:

Lasso Selection in progress

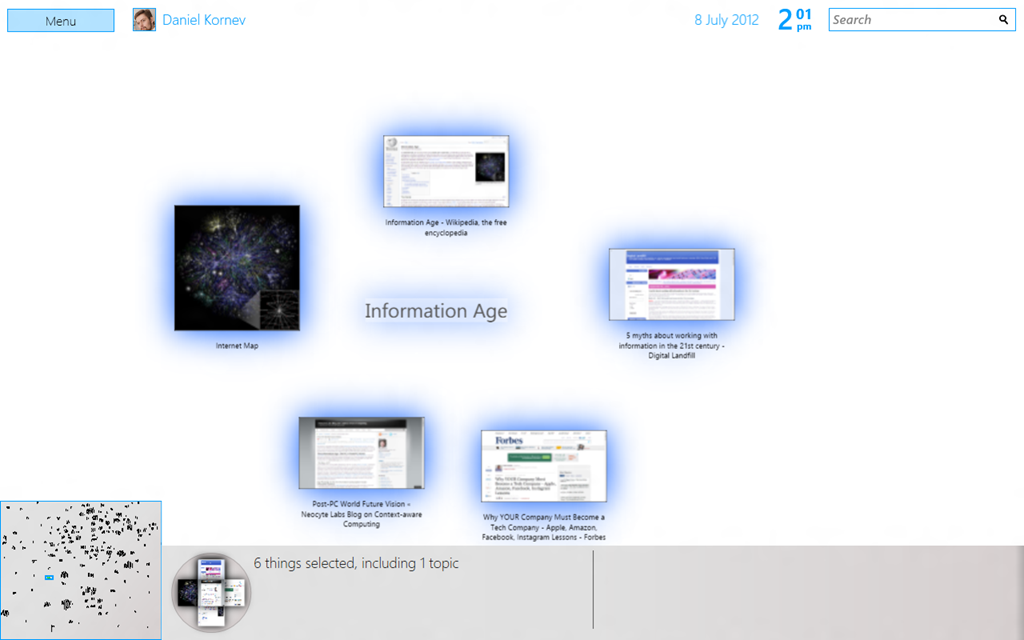

Several things are now selected
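To illustrate how a free-form lasso can be turned into a selection, here is a minimal sketch. It assumes that a thing counts as selected when its centre point lies inside the closed lasso line; that rule, and the names `point_in_polygon` and `lasso_select`, are our assumptions for illustration rather than a description of the real implementation. The test itself is the standard ray-casting check.

```python
def point_in_polygon(x, y, polygon):
    """Ray casting: count how many polygon edges a ray going right from (x, y) crosses."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]  # wrap around to close the lasso
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def lasso_select(things, lasso):
    """Return the names of things whose centre lies inside the lasso.

    `things` maps a name to an (x, y) centre; `lasso` is a list of (x, y) points.
    """
    return [name for name, (x, y) in things.items()
            if point_in_polygon(x, y, lasso)]


# Hypothetical example: a square lasso and three things.
lasso = [(0, 0), (10, 0), (10, 10), (0, 10)]
things = {"note": (5, 5), "photo": (12, 5), "topic": (1, 9)}
selected = lasso_select(things, lasso)  # "photo" lies outside the lasso
```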

SELECTING THINGS: DRAWER

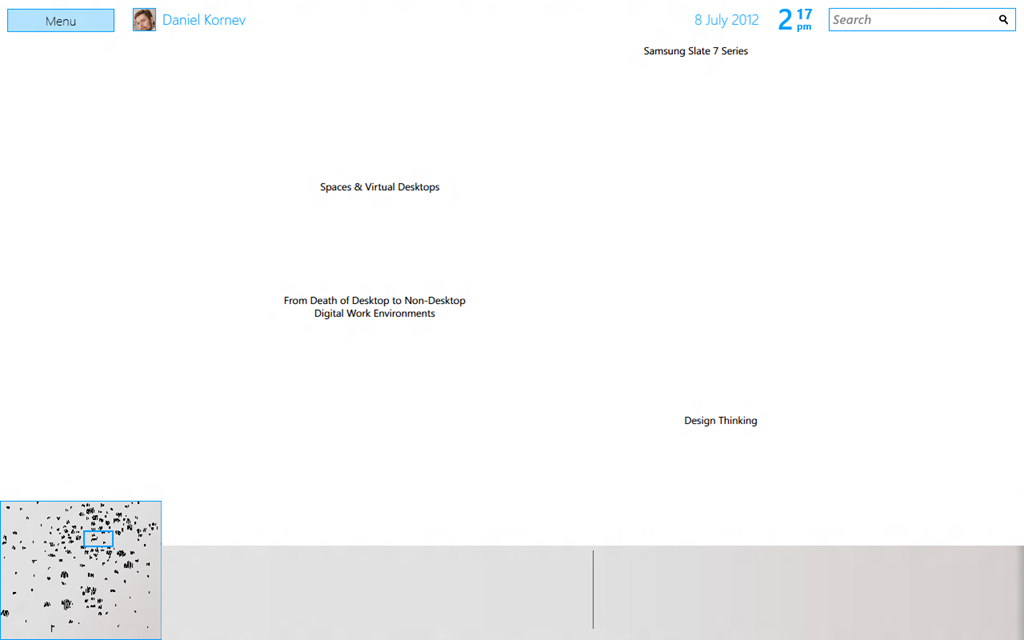

Once one or more things are selected, the drawer part of the interface appears:

One thing is selected; its name, classification and thumbnail (if available) are shown

Five things are selected; now only the number of selected things is shown, as a hint to the user of how many things she has selected

Sixteen things are selected; as topics do not have thumbnails, thumbnails are shown only for those items that have them; however, there is a hint for the user that her selection includes a topic in addition to other items.

The drawer plays the role of a visual clipboard, helping the user see what is selected right now. This part of the system is heavily influenced by an approach common in real-time strategy (RTS) games, where selected units are shown in a “drawer” for exactly the same purpose.

MOVING THINGS

Navigation is the same in both modes; moving things over short and long distances is not. Why?

Moving things over a short distance is much like navigating the space: point and click (tap, stylus down), drag, release the mouse (pen, touch). Done. However, when the space holds a sufficiently large number of things, the need for a better metaphor for long-distance moves becomes more pressing. To find one, we started research in several directions:

- We wanted to find an easy way to transfer things that is already familiar to the audience,

- We wanted to make the metaphor itself easy,

- We wanted to make sure it fits into the NUI vision of Zet Universe and modern NUI trends (interaction is done directly with content).

It is known that RTS games initially borrowed some ideas from desktop environments, namely the technique of “clicking and dragging” to move units around. However, moving things around means something different in these games, and the idea of “click on a unit, move across the map, right-click to send the unit to the new location” quickly became the standard.

However, in the Natural User Interface paradigm the user expects all content to be directly interactive; specifically, she can drag content with her fingers. At the same time, as noted above, it is annoying to drag the same thing over a long distance, so we needed to find a compromise.

Below we describe the approach we took based on these ideas and considerations.

SHORT DISTANCE: "TAP-N-MOVE"

To move one thing:

- tap on it,

- drag it directly within the boundaries of the screen, being as precise as the input method permits,

- leave it at the desired place.

To move many things:

- click on the “lasso selection” button,

- draw a free-form line around these things as described above,

- make either a right-click or a long tap.

LONG DISTANCE: "TELEPORTATION"

The same approach is used for both one and many things in the case of a long-distance transfer:

- select one or more things,

- get to the new destination using a series of pan-and-zoom operations,

- make a right-click or a long tap at the destination point to get all selected things “teleported” there.
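The “teleportation” step itself can be sketched as a simple coordinate shift. The post does not say how several teleported things are arranged at the destination, so this example assumes they keep their relative layout and that the centre of the group lands on the point of the right-click or long tap. The `teleport` function and its parameters are hypothetical.

```python
def teleport(positions, selected, destination):
    """Return new positions with the selected things moved to `destination`.

    `positions` maps a thing's name to its (x, y) location in the space.
    Assumption: the group keeps its relative layout and its centroid lands
    on the destination point; things that are not selected stay where they are.
    """
    moved = dict(positions)
    if not selected:
        return moved
    # Centroid of the selection in space coordinates.
    cx = sum(positions[name][0] for name in selected) / len(selected)
    cy = sum(positions[name][1] for name in selected) / len(selected)
    dx, dy = destination[0] - cx, destination[1] - cy
    for name in selected:
        x, y = positions[name]
        moved[name] = (x + dx, y + dy)
    return moved


# Hypothetical example: two selected things jump far away in one step.
positions = {"a": (0, 0), "b": (2, 0), "c": (50, 50)}
result = teleport(positions, ["a", "b"], (1001, 500))
```

The cost of the move does not depend on the distance: however far the user has panned and zoomed, the selected things arrive in a single step.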

WRAP-UP

So, today we discussed how the user can navigate her Zet Universe of information, select one or many things, and move them over a short distance (within the screen boundaries) or over a long distance.

Now, if only we could teleport to a new geographical position on Earth as simply, and in almost zero time, as you can move information in your Zet Universe!
